This is a post intended to cover some of the more advanced analysis techniques from a project ~5 years ago (2020-2021) which enabled greater-than-state-of-the-art accuracy with single-trial, non-invasive, non-visual-paradigm signal capture.

Motivation: I was attempting to build a non-invasive learning-assistant peripheral that could capture real-time brain signals (non-laboratory paradigm). I examined a large number of hardware options, but this post focuses on the signal processing side.

Summary of Approach:

Signal preprocessing/preparation/classification:

- Harmonic decompositions

- Physiological confound removal

- eyelid blinks,

- head movements,

- eye movements/saccades

- Harmonic-based ML/NN approaches

What I was NOT doing:

- Relying on paradigms that use

- (a) stimulus flashing (SSVEP): these require staring at a flashing screen, which increases eye strain and reduces potential consumer use,

- (b) sustained hemispheric activation (motor imagination): these require sustained, unnatural effort from the user, radically reducing potential use.

- (c) Relying on non-stimulus-tagged signals (like general engagement/task readiness): these signals (like the others in this list) have very few applications compared with stimulus-linked brain signals.

Targets:

- Familiarity/novelty

- Correctness/error (the participant’s perception of correctness)

Summary of Achievements:

- Improvement of single-trial ROC AUC for the two targets above from ~0.505 and ~0.51-0.52 (state of the art reported at the time of the project) to 0.54 and 0.56.

- Obviation of traditional signal processing methods such as bandpass, notch/high-pass filtering.

- Minor Achievements:

- Demonstration of ‘harmonic’ kernels within CNNs/transformer architectures

- Leveraging of phase in classification (traditionally, at least at time of this project, amplitude or coherence signals are generally used)

- Successful demonstration of single-trial capture without laboratory conditions (headrest, lab-grade EEG, etc)

- Cleaning signal using a small calibration phase and reversible spectral decompositions

Methods/Projects

Briefly:

- Artifact removal: Spectral Principal Component Analysis (PCA)

- I also coded spectral ICA, CSP, and other approaches, but ended up using spectral PCA for head-movement artifacts. The channels x time data are decomposed into channel x frequency x time with a windowed, reversible FFT procedure and individually PCA’d, and the PCA’d channel (component) with the highest correlation against a PCA’d component of the inertial sensors (3 sensors, one for each dimension) is zeroed out. The entire process is then reversed (IFFT, etc.), and the result is a clean signal without head-movement artifacts.

- This was used in a real-time mobile set-up (using an NVIDIA Jetson, OpenBCI chip, and a custom-built eye-tracker with a ~250 Hz framerate).
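The spectral PCA clean-up described above can be sketched roughly as follows. This is a minimal illustration under my own assumptions, not the original code: it uses non-overlapping blocks (the simplest exactly reversible windowing), an uncentered PCA via SVD at each frequency, and the hypothetical names `block_fft`, `block_ifft`, and `remove_motion`.

```python
import numpy as np

def block_fft(x, win):
    """(channels, time) -> (channels, freqs, blocks) using non-overlapping blocks."""
    n_ch, n_t = x.shape
    n_blk = n_t // win                      # a trailing partial block is dropped
    blocks = x[:, :n_blk * win].reshape(n_ch, n_blk, win)
    return np.fft.rfft(blocks, axis=-1).transpose(0, 2, 1)

def block_ifft(spec, win):
    """Exact inverse of block_fft."""
    blocks = np.fft.irfft(spec.transpose(0, 2, 1), n=win, axis=-1)
    return blocks.reshape(spec.shape[0], -1)

def remove_motion(eeg, imu, win=64):
    """Per frequency, zero the EEG component that best tracks the IMU (assumed scheme)."""
    E, M = block_fft(eeg, win), block_fft(imu, win)
    out = np.empty_like(E)
    for f in range(E.shape[1]):
        # Uncentered PCA via SVD; rows of vh are unit-norm component time courses.
        u, s, vh = np.linalg.svd(E[:, f, :], full_matrices=False)
        # First principal time course of the 3 inertial axes at this frequency.
        ref = np.linalg.svd(M[:, f, :], full_matrices=False)[2][0]
        corr = np.abs(vh @ ref.conj())      # |correlation| with the IMU component
        s[np.argmax(corr)] = 0.0            # drop the most motion-like component
        out[:, f, :] = (u * s) @ vh
    return block_ifft(out, win)
```

Calling `remove_motion(eeg, imu)` with `eeg` shaped (channels, samples) and `imu` shaped (3, samples) returns a cleaned array of the same shape (up to the dropped partial block). A real-time version would presumably learn the components during the calibration phase rather than recomputing them on every call.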

- Eye-fixation tracking (sometimes called ‘foveal’ related potentials) to tag data with micro-eye-fixation events

- For my main project, I used the mobile eye-tracker (pair of glasses with cameras pointed at the eyeballs to capture any eye movement at a high frequency), and some custom Python software to grab eye-fixation events: any ‘resting’ of the eye in a direction for >50 ms (I think? This is from memory) was counted as a micro-fixation and marked in the brain data in real-time. Any blink was counted as a ‘new eye event’ as well.

- Rationale: The occipital cortex is basically a giant projection screen, so anytime your eyes move (usually in a jerky saccade, way more often than you realize), you’re swinging your camera and changing the image being processed on the ‘projection screen’/occipital cortex, which then feeds forward into parietal, etc. Not capturing eye saccades is a major oversight of most non-invasive research and conflates potentially 20+ events/second together in one smear as you try to decode brain signals.
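A minimal sketch of the micro-fixation tagging described above, assuming a simple velocity threshold. The 30 deg/s threshold, the degree units, and the function name `detect_fixations` are my own illustrative choices; the only number taken from the text is the ~50 ms minimum duration. Blink samples are assumed to arrive as NaN and simply break a fixation.

```python
import numpy as np

def detect_fixations(gaze, fs=250.0, min_dur_s=0.050, max_speed=30.0):
    """Return (start, end) sample indices (end exclusive) of fixations.

    gaze: (n_samples, 2) gaze angles in degrees; NaN rows mark blinks.
    """
    # Sample-to-sample gaze speed in deg/s.
    speed = np.linalg.norm(np.diff(gaze, axis=0), axis=1) * fs
    # A sample is 'still' if the eye barely moved since the previous sample.
    # NaN speeds compare as False, so blinks end the current fixation.
    still = np.concatenate([[False], speed < max_speed])
    # Locate the edges of each run of consecutive still samples.
    padded = np.concatenate([[False], still, [False]])
    edges = np.flatnonzero(np.diff(padded.astype(int)))
    starts, ends = edges[::2], edges[1::2]
    keep = (ends - starts) / fs >= min_dur_s
    return list(zip(starts[keep].tolist(), ends[keep].tolist()))
```

Each returned start index can then be written into the EEG stream as an event marker, so that epochs are locked to fixation onsets instead of to planned stimuli.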

- For the free-form cognitive tracker project (no planned stimulus events), a canonical ERP waveform (the mean ERP curve from more fixed, less noisy conditions) was fitted to the data. Peaks and lags of P1, N2, P3, P6, etc. were all reported, along with fit values.

- Comparisons between free reading, watching an old movie versus a new movie, staring/looking around, and trying to mentally solve problems confirmed significant correlations between the ERP measures and:

- novelty/familiarity

- memory encoding/decoding

- visual processing/less or non-processing

- computing/thinking vs more passive

- etc
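The canonical-ERP fitting mentioned above could look something like the sketch below: slide a template over a fixation-locked epoch, keep the lag with the best correlation, and report a gain and fit value, plus a simple peak picker for named components. The function names, the lag search, and any component windows you would pass in are assumptions for illustration, not the original implementation.

```python
import numpy as np

def fit_template(epoch, template, max_lag):
    """Best (lag, gain, r) of `template` inside `epoch`.

    `epoch` must hold len(template) + 2 * max_lag samples so the template
    can slide max_lag samples either way around the nominal onset.
    """
    n = len(template)
    tc = template - template.mean()
    best = (0, 0.0, -np.inf)
    for lag in range(-max_lag, max_lag + 1):
        seg = epoch[max_lag + lag : max_lag + lag + n]
        sc = seg - seg.mean()
        denom = np.linalg.norm(sc) * np.linalg.norm(tc)
        r = float(sc @ tc / denom) if denom > 0 else 0.0
        if r > best[2]:
            gain = float(sc @ tc / (tc @ tc))   # least-squares amplitude scale
            best = (lag, gain, r)
    return best

def peak_in_window(epoch, fs, t0, t1, polarity=1):
    """Latency (s) and amplitude of the largest peak of the given polarity in [t0, t1)."""
    i0, i1 = int(round(t0 * fs)), int(round(t1 * fs))
    k = i0 + int(np.argmax(polarity * epoch[i0:i1]))
    return k / fs, float(epoch[k])
```

Here `r` plays the role of the ‘fit value’, `lag` is the latency shift relative to the canonical curve, and `peak_in_window(..., polarity=-1)` would pick out negative components such as an N2 within whatever window you choose.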

- Attentional-effort capture in a video game

- For another project, I used eye-tracking in a continuous task (not an eye-fixation/single-stimulus approach): I captured data during a video game (an autobattler: a turn-based game with an activity-intense preparation phase between rounds) and used ML to predict each round’s outcome. The results are stochastic and depend on a lot of factors outside of the player’s control (the ML model ended up predicting about 3 rounds ahead, due to the lag between decisions and outcomes in the game), but I had a great deal of success on a small dataset in predicting outcome based on an attentional measure I’ll describe here.

- I used CCA (Canonical Correlation Analysis), which essentially transforms two datasets simultaneously in order to maximize the correlation of their latent spaces. You can ‘train’ the transforms on a calibration dataset (say, one long game of the user), and then apply the learned transforms to future datasets to predict. In this case, the neural analysis was fairly theory-agnostic, as I simply allowed whatever signals were linked to driving eye movement (derivative of movement), or to receiving information from the changes in eye position, to be selected (with a simple ML model to predict outcome from those signals). In retrospect, I suspect attention related to visual processing was what we were capturing here.
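For readers unfamiliar with CCA, here is a small NumPy sketch of the calibrate-then-apply pattern described above. It is a textbook CCA (whitening plus SVD), not the original pipeline; `fit_cca`, `apply_cca`, and the small ridge term `reg` are my own naming and assumptions. In this setting `x` would be EEG features and `y` eye-movement features such as gaze velocity.

```python
import numpy as np

def _inv_sqrt(c):
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    w, v = np.linalg.eigh(c)
    return (v / np.sqrt(w)) @ v.T

def fit_cca(x, y, n_comp=1, reg=1e-6):
    """Fit CCA on calibration data x (n, p) and y (n, q)."""
    mx, my = x.mean(axis=0), y.mean(axis=0)
    xc, yc = x - mx, y - my
    n = len(x)
    cxx = xc.T @ xc / (n - 1) + reg * np.eye(x.shape[1])
    cyy = yc.T @ yc / (n - 1) + reg * np.eye(y.shape[1])
    cxy = xc.T @ yc / (n - 1)
    kx, ky = _inv_sqrt(cxx), _inv_sqrt(cyy)      # whitening transforms
    u, s, vt = np.linalg.svd(kx @ cxy @ ky)      # s holds canonical correlations
    return {"mx": mx, "my": my, "wx": kx @ u[:, :n_comp],
            "wy": ky @ vt.T[:, :n_comp], "corr": s[:n_comp]}

def apply_cca(model, x, y):
    """Project new data with the transforms learned during calibration."""
    return (x - model["mx"]) @ model["wx"], (y - model["my"]) @ model["wy"]
```

The projected EEG variates from `apply_cca` are what a simple downstream model would take as input when predicting the round outcome.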

- Memory encoding/decoding

- This project reproduced a known memory literature paradigm, where you present (a) novel and familiar stimuli (I believe MtG art) and (b) correct and incorrect labels, in a counterbalanced presentation so as not to rely on ‘rarity’ for error signal capture as many experiments do.

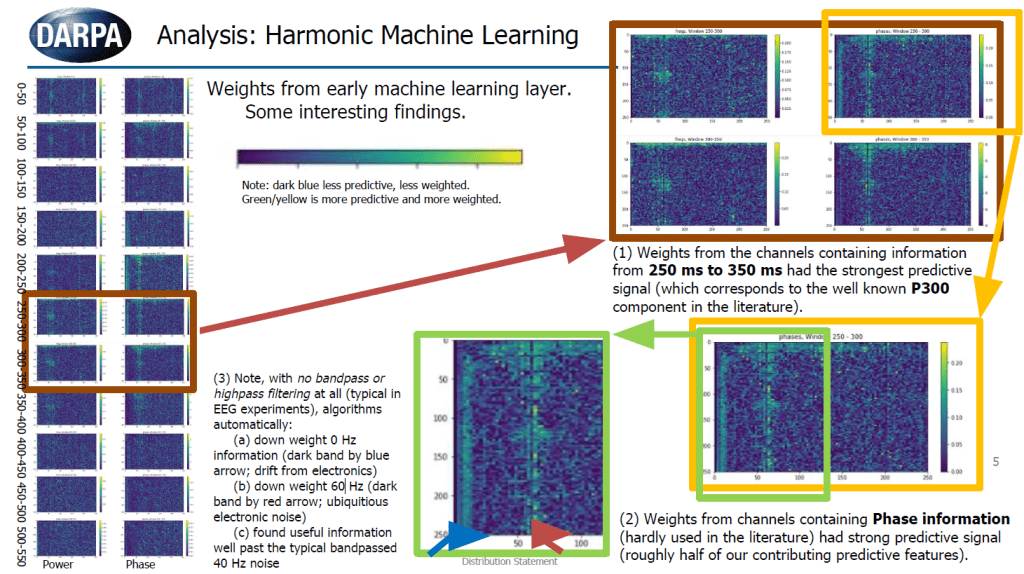

- Interestingly, with no signal preprocessing such as filtering out 60 Hz (typically, you have lots of noise at that frequency in the US because of powerline noise), the neural network framework automatically downweighted to 0 the inputs from 60 Hz (and 0 Hz, the DC offset) and, interestingly, kept many bands above 40 Hz, which are traditionally filtered out in EEG signal processing.
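To make the ‘no manual filtering’ idea concrete: if each trial is handed to the model as a time-frequency grid, every frequency bin (including 0 Hz and 60 Hz) gets its own weights, and the model is free to push the useless ones toward zero. Below is a sketch of such a feature grid with both amplitude and phase. The windowing choices, the cos/sin phase encoding, and the name `spectral_features` are assumptions of mine, not the original feature code.

```python
import numpy as np

def spectral_features(trial, fs, win):
    """Amplitude and phase per (window, frequency) for one single-channel trial."""
    n_win = len(trial) // win
    blocks = trial[: n_win * win].reshape(n_win, win) * np.hanning(win)
    spec = np.fft.rfft(blocks, axis=-1)
    freqs = np.fft.rfftfreq(win, d=1.0 / fs)
    amp = np.abs(spec)
    phase = np.angle(spec)
    # Phase is circular, so give the model cos/sin rather than the raw angle.
    feats = np.stack([amp, np.cos(phase), np.sin(phase)], axis=-1)
    return freqs, feats            # feats: (windows, freqs, 3)
```

With a 250 Hz sampling rate and 50-sample windows, a 600 ms trial becomes 3 windows x 26 frequency bins (5 Hz apart), so the 0 Hz and 60 Hz bins are explicit inputs that a learned weight can switch off.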

- Harmonic neural networks

- I think this one warrants its own project description (publication/github, if I were more motivated), but I essentially attempted to architect a new foundational model leveraging ‘harmonic’ advantages:

- a dozen different ‘harmonic’ kernels (sin, sinc, cosine, etc., and combinations)

- correlational comparisons against ‘internal’ functions (vs weighted summation of inputs). So instead of the typical feed-forward paradigm ax1 + bx2 + cx3 + … -> next weight, I was using a much more complex correlation function (but a simple function, from a statistical point of view), and other combinations of normalized dot products.

- What made some of these attempts interesting from a ‘biologically inspired’ neural network point of view was that some methods were ‘phase invariant’: they were ‘learning’ the wave pattern while ignoring its temporal position. If you shifted a wave to the left or right, you’d still get the same result.

- more of a ‘diffusion’ network (although I wasn’t aware of them, nor ‘physics informed’ neural networks, from 2018-2020, at the time I worked on this) in that a single neuron would produce a vector, rather than a single number.

- So the internally learned parameters of the neuron would produce/generate a function that would be correlated/dot-producted/etc against the vector of inputs and produce a single number.

- I ended up interleaving traditional neurons (feedforward/CNN) with these harmonic neurons.

- I later added residual connections and transformer attention, although I resisted adding these immediately since they can do quite a bit on datasets by themselves. For testing, I generated complex and noisy harmonic signals, then downloaded some time-series datasets (power data, stocks, etc.).
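A toy version of the ‘harmonic neuron’ idea from the bullets above: the neuron’s parameters (here just a frequency and a phase) generate a waveform, which is compared with the input vector by a normalized correlation to produce one number. Pairing a sine and a cosine kernel is one standard way to get phase invariance. This is a guess at the flavor of the approach, with hypothetical names, and it omits training entirely.

```python
import numpy as np

def harmonic_neuron(x, freq, phase, fs):
    """Normalized correlation between x and an internally generated sinusoid."""
    t = np.arange(len(x)) / fs
    kernel = np.sin(2 * np.pi * freq * t + phase)
    xc, kc = x - x.mean(), kernel - kernel.mean()
    return float(xc @ kc / (np.linalg.norm(xc) * np.linalg.norm(kc) + 1e-12))

def phase_invariant_neuron(x, freq, fs):
    """Combine a sine and a cosine kernel so the output ignores temporal shifts."""
    a = harmonic_neuron(x, freq, 0.0, fs)
    b = harmonic_neuron(x, freq, np.pi / 2, fs)
    return float(np.hypot(a, b))
```

For a pure 10 Hz wave spanning whole cycles, `phase_invariant_neuron(x, 10.0, fs)` stays near 1 however the wave is shifted, while `harmonic_neuron` alone swings between -1 and 1 with the shift. In a trainable version, `freq` and `phase` (or the parameters of a sinc or other kernel) would be learned by gradient descent.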

- Motivation: I should have started with this, but it’s well known that many time series have harmonics in them (especially brain data). Instead of typical approaches (Fourier, wave packet, etc. decompositions/manual analyses), I was trying to build something fundamentally scalable, so that ‘waves inside of waves’, and so on, could be discovered automatically by a network and enough data. Prior to this, ~2018, I built a recursive Fourier decomposition time series predictor/extender that worked better than Prophet (Facebook’s time series tool) on some specific datasets that I had, and this was a continuing development of trying to expand that advantage.
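One possible reading of a ‘recursive Fourier decomposition’ extender is a greedy loop: take the strongest FFT bin of the residual, fit a sinusoid at that frequency, subtract it, repeat, and then evaluate the fitted sinusoids past the end of the data. The sketch below is only that reading, with hypothetical function names; it is not the 2018 tool, and it is limited to frequencies that fall on the FFT grid.

```python
import numpy as np

def fit_sinusoids(x, n_terms):
    """Greedy decomposition of x into a constant plus n_terms sinusoids."""
    n = len(x)
    t = np.arange(n)
    offset = float(x.mean())
    resid = x - offset
    terms = []
    for _ in range(n_terms):
        spec = np.abs(np.fft.rfft(resid))
        spec[0] = 0.0                          # ignore what is left of the DC term
        k = int(np.argmax(spec))
        w = 2 * np.pi * k / n                  # radians per sample
        basis = np.column_stack([np.cos(w * t), np.sin(w * t)])
        coef, *_ = np.linalg.lstsq(basis, resid, rcond=None)
        resid = resid - basis @ coef           # recurse on the residual
        terms.append((w, float(coef[0]), float(coef[1])))
    return offset, terms

def extend(offset, terms, t):
    """Evaluate the fitted model at arbitrary (e.g. future) sample indices t."""
    y = np.full(len(t), offset, dtype=float)
    for w, a, b in terms:
        y += a * np.cos(w * t) + b * np.sin(w * t)
    return y
```

Forecasting is then `extend(offset, terms, np.arange(n, n + horizon))`, which continues every fitted wave beyond the observed samples.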

- Context: For this particular brain-reading project, I had many single-trials of brain signal captured (from when the participant first sees the word/image, to about 600 ms after), trying to predict whether they’d seen it before (familiarity), and whether it was correctly or incorrectly labelled. (Figure above) This harmonic approach on this dataset had several advantages:

- 0 Hz/DC/intercept — traditionally removed manually — network automatically weighted it to 0 finding no useful signal.

- 60 Hz — traditionally removed manually — network automatically down-weighted to 0.

- 250-400 milliseconds shows a bunch of predictive weights for frequencies ~35-80 Hz (and sub-bands, ~40-60 Hz), which corresponds to the traditional ‘P300’ for discrimination tasks, but automatically found, with a lot more nuance.

- Another interesting advantage is that many frequencies above 40 Hz showed useful signal, often low-passed out in typical EEG analysis.

- Finally, phase also showed predictive signals (right boxes) which is usually ignored in typical analysis or not shown to be predictive.

- This combination of advantages gave a 0.54-0.56 single-trial ROC AUC, which sounds pretty crappy but, measured as margin above chance (0.5), is roughly 5-10x better than the typical EEG literature of the last 10 years for these signals (~0.505-0.51), which can still report significant p-values when aggregating all trials.
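For reference, single-trial ROC AUC and the ‘margin above chance’ comparison used above can be computed as in this small sketch. The helper names are mine, and the pairwise AUC formula is the standard rank-based definition rather than anything project-specific.

```python
import numpy as np

def roc_auc(scores, labels):
    """Probability that a random positive trial outscores a random negative one."""
    scores, labels = np.asarray(scores, float), np.asarray(labels)
    diff = scores[labels == 1][:, None] - scores[labels == 0][None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))   # ties count half

def lift_over_chance(auc, baseline, chance=0.5):
    """Ratio of the two margins above chance."""
    return (auc - chance) / (baseline - chance)
```

With the numbers quoted in the text, `lift_over_chance(0.54, 0.505)` is about 8 and `lift_over_chance(0.56, 0.51)` is about 6, which is where a rough 5-10x figure comes from.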

- My follow-up plan was to leverage high-quality eye-tracking, more sophisticated fundamental models, more context labelling, more data, and some other advantages I can detail in a future post (and have already in multiple rejected proposals) to increase the signal to 0.7-0.8 (or greater) where it becomes more usable and less frustrating from a user-perspective.

- As an example, eye movement is a colossal injector of noise, almost universally overlooked (except by one lab in Germany) and often ‘corrected’ via strict participant instruction and EOG monitoring. Any EEG wearable, in my opinion, should have millisecond eye-tracking precision, since the data occupying the back 1/3 of your brain (occipital cortex) is being inhaled through your eyeballs (moving cameras, ~60M neurons each), with zero-context tracking. Your eyes can micro-saccade 20x a second, so it is doable and capturable by current technology.

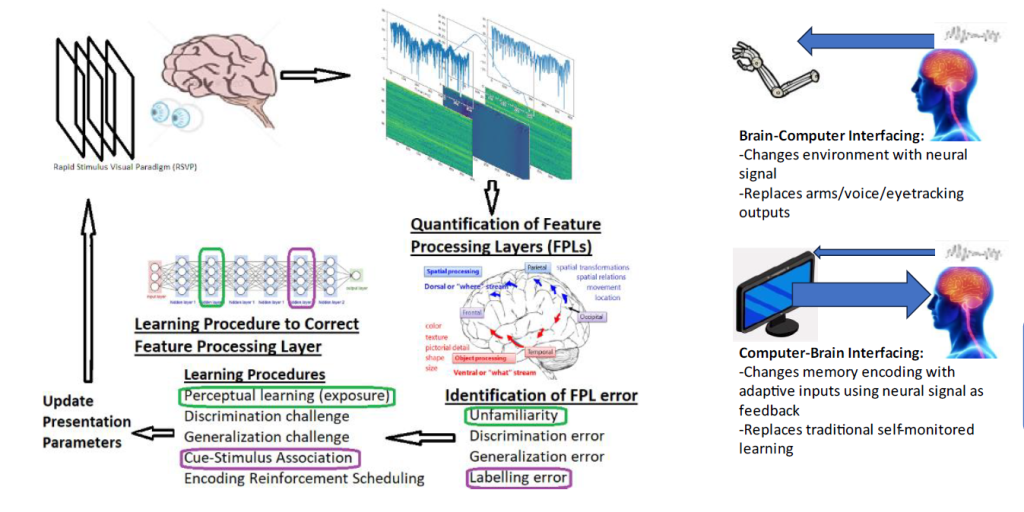

More motivation on approach

The theoretical reason not to use RSVP/luminance modulations (perceptual) or extensive training (pre-motor) is that the entirety of the decision-making process (roughly, parietal/frontal/etc.) is ignored, despite being the most valuable cognitive target. I’m also discounting ‘state’/‘readiness’ signals, which can come from frontal sources, since they are usually captured in a way that is not stimulus-locked.

Historically and currently, the use cases have been:

- (1) Simple mental state capture that offers little capability for meaningful feedback other than basic attentional reminders or difficulty throttling based on physiological strain. These “effort states” (generalized arousal, frustration, engagement), which can be captured via other physiological measures, aren’t specific enough to be used as feedback measures for the processes of human learning.

- (2) Non-invasive brain-computer interface (BCI) technologies that capture very small bit signals from pre-motor planning areas and motor areas, well after the terminus of any brain judgements and memory comparisons, milliseconds before transmission (or inhibition of transmission) to the peripheral nervous system.

- (3) Attentional fixation of the eyes on a blinking stimulus captured via steady-state visual evoked potentials (SSVEP), which essentially capture eye fixation and gaze detection.

The actual technological vision of computer-to-brain interfacing (say, for learning) would be a granular tracking of a stimulus as it is processed by the brain, versus the approaches in the list above, which are either stimulus-agnostic or purely perceptual, not cognitive/decision-oriented.

Feature Processing Layers (FPLs)

Even current invasive-device research focuses on grabbing motor cortex signals, designed to replace the spinal cord -> hand -> mouse/keyboard pathway, which already works optimally for 99%+ of potential users. This project instead focused on feature processing layers (“FPLs”), which are the processes by which stimulus input (say a flashcard, a test question, a scenario, etc.) is processed by the brain and turned into an output (encoded memory, an answer, a decision, etc.).

Metacognitive Monitoring

This project also attempted to push forward ‘automated learning’ or ‘augmented human learning’ within human brains. I found my old report, so I’ll just paste some of it here:

- Successful demonstration of the fundamental research will enable a path forward by which higher levels of human processing can be bypassed, and rote memorization and routine training can be conducted rapidly using single-trial FPL signals from wearable neurotechnology. Our lower level perceptual systems can operate in excess of 100 Hz for detection of perceptual familiarity, upwards of 20 Hz for target detection, group identity, and semantic labelling. Our higher-level metacognition systems operate much more slowly. While these metacognition systems are useful in our dynamic day-to-day environments and novel problem solving situations, this system is highly irrelevant and outdated for the vast majority of personnel training and student memorization that still occurs in our military, schools, and workforce. Being able to directly read-out the FPLs is a transformative capability that will enable the building of rapid, adaptive visual presentation software that will allow successful ‘programming’ of them, enabling greatly accelerated memorization, training, and learning. Successful transformative technology that accelerates human learning is one of the largest force-multipliers government, military, business, and academics can work on for national and human progress.

Obviously the longer-term goal is much more ambitious, but the methods/experiments section has some specific methods that may be of use to active research/development efforts.

Unrelated Addendum

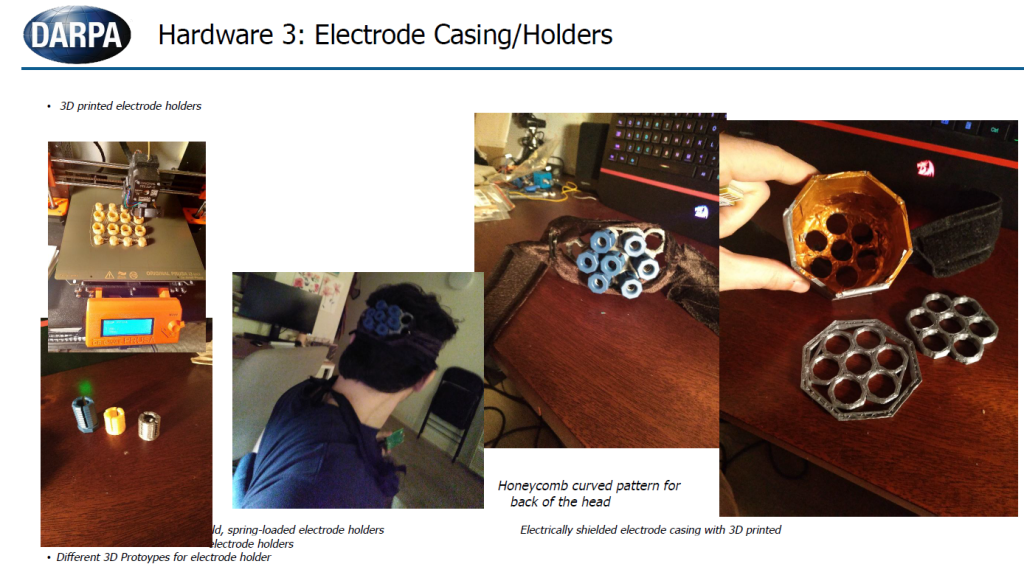

Hardware:

Some hardware stuff:

- Eye tracker (3D printed)

(~220 Hz of eye position detection)

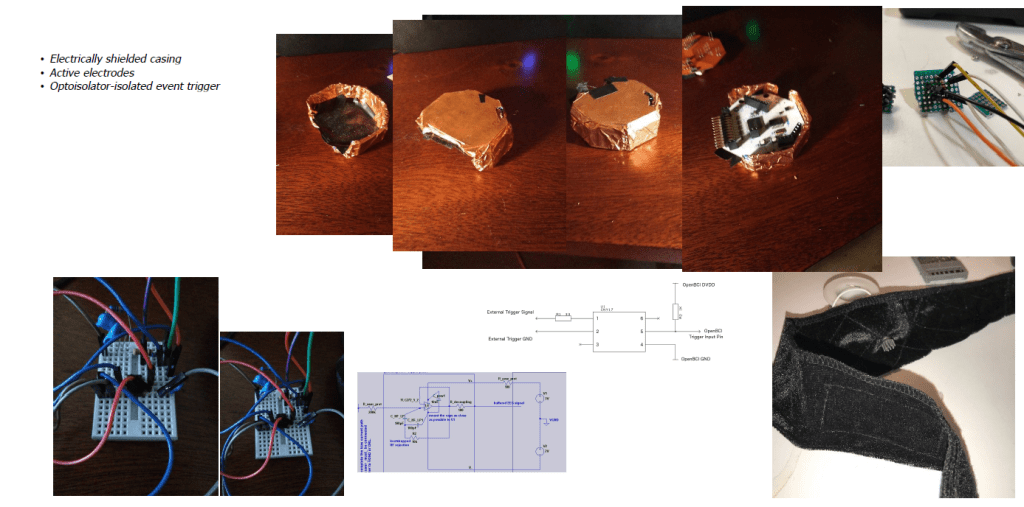

Attempt at electrical shielding:

Overall CBI Concept:

Diagram of ‘computer-to-brain interfacing’ vs ‘brain-to-computer’ interfacing concept.